Good morning,

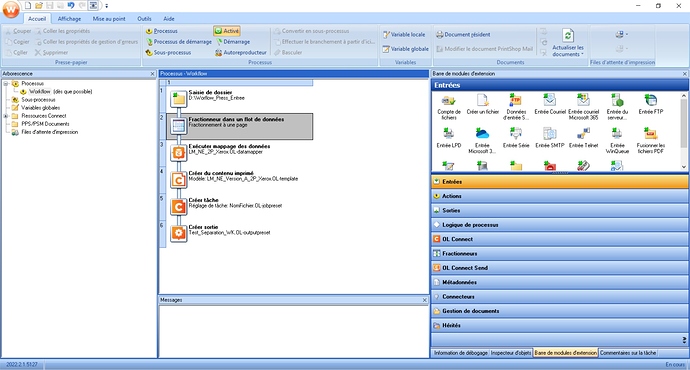

Here is the process I use to generate the production files.

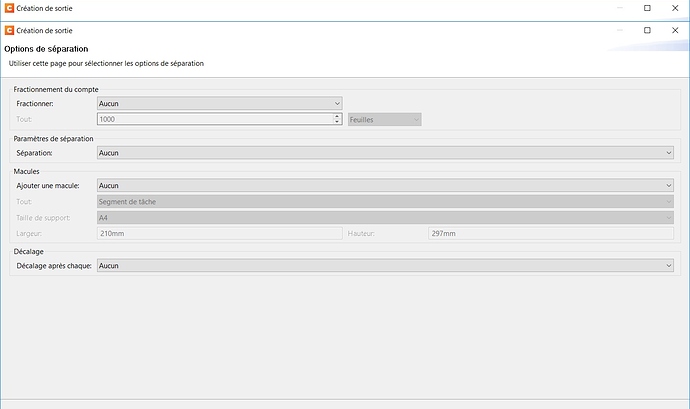

The split is done through a Job Creation task, which is set to 1000 sheets.

In the case where we have a file of 50,000 sheets, all the production files arrive at the same time at the end of the process.

Is it possible to have them appear progressively, as they are produced?

Thanks

Hello @JulienBat,

To achieve this, you’d first need to split your data into chunks.

Hello,

If I understand correctly, should I split the csv files upstream?

Isn’t there a setting that could be added to the process instead?

Thanks

You would need to use a data splitter, if this is what you mean by “setting”.

How is the data working? Is it 1 CSV line per record? Or is there grouping done on a field change?

It’s a flat CSV file with one line per record and no grouping; I send the entire file. By “setting”, I meant adding an action to the Workflow process. Is that necessary for the split PDFs to arrive gradually?

Thanks

@JulienBat: note that if you create 10 000 separate jobs (because your CSV has 10 000 lines), the entire production will be much less efficient than processing a single job and splitting it through Job Creation. So you will be slowing down the production in order to see each file appear one by one, instead of all at once.

Perhaps you could split your CSV file into larger chunks (for instance, 50 lines per chunk) so that each chunk would generate 50 documents at once? This would have less of an impact on performance.

If you could explain a bit more why you need those files to be generated one by one, it would help us determine if there are better methods for processing the jobs.

Hello Phil,

I work in advertising mail; we send mailings out for printing.

We mainly work with imposition from flat CSV files, and because of production constraints we have to separate the PDFs into batches of 1000 sheets.

Currently, we have to wait for the end of the process to retrieve all the print files.

I wanted to know if it was possible for them to arrive gradually, so that production could start in parallel.

I hope my explanation is clear.

Thanks

If your template creates a single sheet per record, then you can split your data file into chunks that contain 1000 lines each. This will create PDFs sequentially, and you can start processing the first ones before the last ones have been produced.
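To make the chunking concrete, here is a minimal Python sketch of what such a split does to the data. It is not the Workflow splitter itself, only a standalone illustration. The function name `split_csv` and the sample data are hypothetical, and the sketch assumes a comma-delimited file whose first line is a header that each chunk should repeat.

```python
import csv
import io
from itertools import islice
from typing import Iterator, List


def split_csv(text: str, chunk_size: int = 1000) -> Iterator[str]:
    """Yield CSV chunks of up to chunk_size records, each starting with the header row."""
    reader = csv.reader(io.StringIO(text))
    header = next(reader, None)
    if header is None:
        return  # empty input: nothing to yield
    while True:
        # Take the next chunk_size records; the last chunk may be shorter.
        rows: List[List[str]] = list(islice(reader, chunk_size))
        if not rows:
            break
        out = io.StringIO()
        writer = csv.writer(out, lineterminator="\n")
        writer.writerow(header)  # assumption: every chunk needs the header
        writer.writerows(rows)
        yield out.getvalue()


# Hypothetical sample: 2500 records with a single NUMPLI column.
sample = "NUMPLI\n" + "".join(f"{n}\n" for n in range(1, 2501))
chunks = list(split_csv(sample, chunk_size=1000))
print([len(c.splitlines()) - 1 for c in chunks])  # records per chunk
```

Because the function is a generator, each chunk is available as soon as it has been read, which mirrors the idea of starting production on the first batch while the later ones are still pending.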

But if your template creates a variable number of sheets for each record, then you have to process the entire file at once because the imposition depth (1000 sheets, in your case) might fall in the middle of a record.
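The boundary problem can be checked with a little arithmetic. The sketch below is only an illustration: the function name `cuts_inside_record` and the sheet counts are hypothetical, and it assumes you already know how many sheets each record produces.

```python
from itertools import accumulate
from typing import List


def cuts_inside_record(sheets_per_record: List[int], batch_size: int) -> List[int]:
    """Return the 0-based indexes of records that a batch boundary would cut in two."""
    split_records = []
    start = 0  # number of sheets produced before the current record
    for index, end in enumerate(accumulate(sheets_per_record)):
        # The record occupies sheets start+1 .. end. It is cut when a multiple
        # of batch_size falls strictly between start and end.
        next_boundary = (start // batch_size + 1) * batch_size
        if next_boundary < end:
            split_records.append(index)
        start = end
    return split_records


# One sheet per record: boundaries always fall between records.
print(cuts_inside_record([1, 1, 1, 1, 1], batch_size=4))
# Variable sheet counts: the second record straddles the boundary after sheet 4.
print(cuts_inside_record([2, 3, 2], batch_size=4))
```

With one sheet per record the result is always empty, which is why splitting the data file by line count is safe in that case only.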

Thank you for the explanation, that is clear to me.

Hello Phil,

Currently, we create these blocks of 1000 contacts in advance, as several CSV files of 1000 contacts each. How can I automate this via the DataMapper or Workflow?

Thanks

Automate what exactly? The creation of CSV files?

Good morning,

Currently, for a production of 10,000 contacts, we create 10 CSV files of 1000 contacts. When it is time to run the Workflow process, we manually drop the 10 files into it, which allows our workshop to start production without waiting for the entire file.

Is there a way in Press to avoid splitting the CSV files upstream, so that our workshop receives the files as they are produced?

Thanks

In the scenario you’ve described, you simply need to have Workflow split the main file into multiple CSV files. The splitter step in Workflow acts as a loop and creates your final output one split file at a time: while the Connect Server generates the PDF from one split CSV file, the rest of the Workflow process keeps looping and calls the Connect Server to create the next output file.

To complete @jchamel’s suggestion, you could use an Instream splitter task to split your 10,000 contacts into 10 files of 1000 contacts as shown below.

We have set up the splitting in the Workflow process; see below.

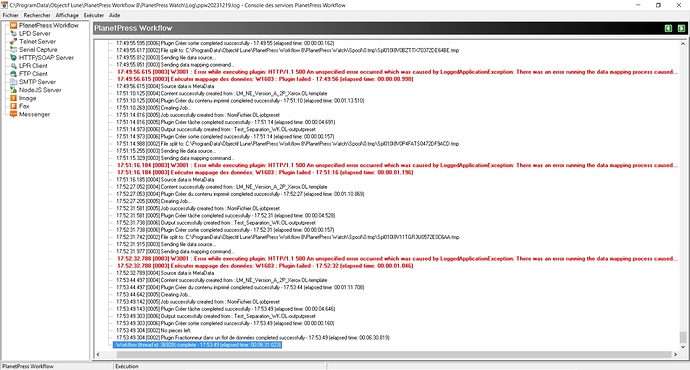

On a file of 4000 contacts, a PDF is indeed generated and it contains contacts 1 to 1000, but I cannot get the following batches: each time, the existing PDF is overwritten with the same sequences.

I hope this is clear; you will find more details in the images.

Thanks

In your Workflow process, immediately after your splitter task, add a Set Job Infos and Variables task to store the value of the %i iteration variable into JobInfo %9.

The %i variable contains a numeric value that gets automatically incremented with each iteration of a splitter or a loop task. Storing that value inside a JobInfo will allow you to use it as part of the output filename (instead of using ${file.nr}, which only changes when the output preset itself creates multiple files, which is not the case here).
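The overwriting can be illustrated outside Workflow with a small Python simulation. This is only a sketch: `write_outputs` and the file names are hypothetical, and the dictionary stands in for the output folder. It shows that a fixed name keeps only the last chunk, while a name containing the iteration number keeps them all.

```python
from typing import Dict, List


def write_outputs(names: List[str]) -> Dict[str, int]:
    """Simulate writing one PDF per chunk; a later write to the same name replaces the earlier one."""
    folder: Dict[str, int] = {}
    for chunk_number, name in enumerate(names, start=1):
        folder[name] = chunk_number  # remembers which chunk was written last
    return folder


chunk_count = 4

# Same name on every iteration: only the last chunk survives.
fixed = write_outputs(["output.pdf"] * chunk_count)

# Iteration number in the name (the role played by %i stored in a JobInfo).
indexed = write_outputs([f"output_{i}.pdf" for i in range(1, chunk_count + 1)])

print(len(fixed), len(indexed))
```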

After testing, we get 3 output files, but they are identical.

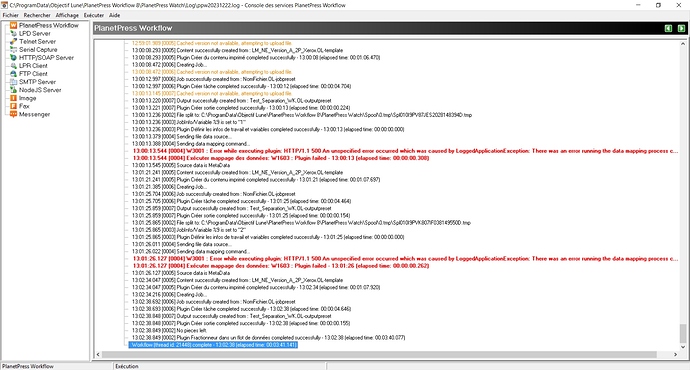

On an address file of 3000 contacts, this generates 3 PDFs with sequences from 1 to 1000. We cannot get the PDFs with sequences from 1001 to 3000. Here is the error message on the services console.

Thanks

Can you please right-click the error line that starts with “13:00:13.544 [0004] W3001” and copy-paste the error into your forum post? The end of the error line isn’t visible in the screenshot of the PlanetPress Workflow Service Console application.

13:01:26.127 [0004] W3001 : Error while executing plugin: HTTP/1.1 500 An unspecified error occurred which was caused by LoggedApplicationException: There was an error running the data mapping process caused by ApplicationException: Error executing DM configuration: Error occurred [Record 1, Step Tout extraire, Field NUMPLI]: An error occurred while trying to find the document column [NUMPLI] (DME000062) (DME000216) (DM1000031) (SRV000012) (SRV000001)

13:01:26.127 [0004] Exécuter mappage des données: W1603 : Plugin failed - 13:01:26 (elapsed time: 00:00:00.262)

It seems that, in your DataMapper configuration, the extraction step named Tout extraire cannot find the column named NUMPLI.

Can you share an anonymized version of your DataMapper configuration?
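In the meantime, one thing that could be checked is whether every split file still starts with the header line that contains NUMPLI. The thread has not established that this is the cause, so treat it as an assumption. The Python sketch below is a hypothetical helper for running that check on the chunk contents: the function name, the `NAME` column and the sample values are invented for illustration, and a comma delimiter is assumed.

```python
import csv
import io
from typing import List


def chunks_missing_column(chunks: List[str], column: str, delimiter: str = ",") -> List[int]:
    """Return the 1-based numbers of the chunks whose first row lacks the column name."""
    missing = []
    for number, text in enumerate(chunks, start=1):
        # Read only the first row of the chunk; an empty chunk gives an empty row.
        first_row = next(csv.reader(io.StringIO(text), delimiter=delimiter), [])
        if column not in first_row:
            missing.append(number)
    return missing


# Hypothetical chunks: the second one starts directly with data, no header.
with_header = "NUMPLI,NAME\n1,Alice\n"
without_header = "1001,Bob\n"

print(chunks_missing_column([with_header, without_header], "NUMPLI"))
```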