“There has to be settings that work best depending on CPU numbers, memory amount, and so on”

Unfortunately, there aren’t because one of the greatest impacts on performance comes form the type of jobs you are running. Lots of small jobs require different settings than a smaller number of large jobs. And of course, a mix of large and small jobs require further fine tuning.

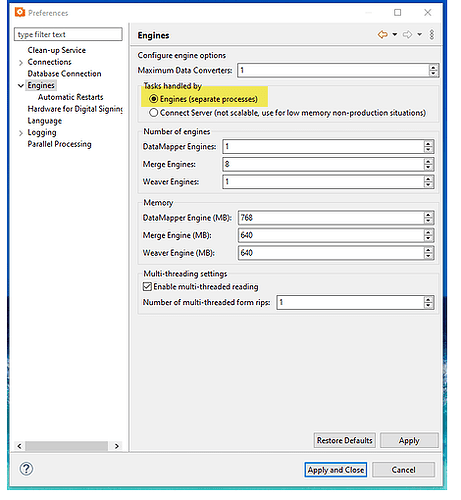

Starting with Connect 2019.2, the Connect Server Configuration application makes it easier to properly configure those settings by selecting an appropriate preset. However, if you still want to customize the settings, here are a few rules of thumb:

Engine memory

- DataMapper: engine memory should only be increased when dealing with large individual records. The size of the entire job is irrelevant. Each job is processed by a single engine but we have found that having more than 2 DM engines running concurrently can actually have an adverse effect on the overall performance as it puts an undue load on the database.

- Content creation: engine memory should be increased when dealing with large individual documents. The total number of documents is usually not relevant. Each individual document is always handled by a single engine, but a batch of documents can be handled by multiple engines running concurrently. There is no limit to the number of Content Creation engines you can run simultaneously, other than the physical limitations of the underlying hardware.

- Output creation: these engines are used for paginated output as well as to provide conversion services to the rest of the system. The amount of memory to reserve for each engine depends on the overall size of the job and the complexity of the operations requested (for instance, additional content that relies on pre-processing the entire job will require more memory). In most environments, having 2 or 3 Output creation engines is more than sufficient to handle a steady load of incoming jobs.

Hardware

- You should always consider that each engine uses one CPU thread (that is not strictly true, but we’re generalizing, here). So if you have an 8-Core CPU (with 16 threads), you could for instance have 2 DM + 6 CC + 2 OC engines, which would use up 10 of the 16 threads. You want to keep a certain number of cores available for the rest of the system (Workflow, MySQL, Windows, etc.)

- Make sure you have enough RAM for each engine to run. I usually recommend 2GB of RAM per CPU thread (in our example above, we would therefore have 32GB of RAM). That doesn’t mean to say you should assign 2GB of RAM per engine: in most instances, all engines work efficiently with less than 1GB each. But the rest of the system requires memory as well and if your system is under a heavy load, you want to make sure your don’t run out of RAM because then the system will start swapping memory to disk, which is extremely costly performance-wise.

Self replicating processes

The most likely process candidates for self-replication are high-availability, low processing flows. For instance, web-based processes usually require the system to respond very quickly, so they are prime candidates for self-replication. Another example is processes that intercept print queues (or files) and simply route them to different outputs with little or no additional processing.

Processes that handle very large jobs are least likely to benefit from self-replication because each process might hog a number of resources from the system (disk, RAM, DB access) and having several processes fighting for those system rsources concurrently can had an adverse effect on performance.

By default, Workflow allows a maximum of 50 jobs to run concurrently and each self-replicating process can then use a certain percentage of that maxiumum. Obviously, if you have 10 processes that are set to use up to 20% of that maximum, then your processes will be fighting amongst themselves for the next available replicated thread. Note that with modern hardware (let’s stick with our 16vCore/32GB RAM system), you can easily double and even triple that max. On my personal system, I have set my max to 200 concurrent jobs, and that works fine. But that’s because my usage is atypical: I run a lot of performance tests, but I don’t need to prioritize one process over another.

Final note on self-replication: if you are heavily using the PlanetPress Alambic module to generate/manipulate PDFs directly from Workflow, then you should be careful when self-replicating those processes because there is a limit to the number of Alambic engines that can run concurrently. Having 10 cloned processes fighting for 4 instances of Alambic will create a bottleneck.

Conclusion

Remember that all of the above are general rules of thumb. Many other users (and in fact, many of my own colleagues!) would probably tell you that from their own experience, the values I quoted are not the most efficient. But hey, gotta start somewhere!

Hope that helps a bit.