Recently I started investigating JSON as a data source for the data mapper. Since so much is JSON these days, it seemed a good idea to explore what is possible.

The data file we receive from our other system is still XML, so in my workflow I first convert it with the XML/JSON Conversion plugin.

I am now trying to solve two issues: one involves this plugin, the other involves the data mapper.

Suppose I have the following source XML:

```xml
<sample>
  <item>
    <mytag1>1</mytag1>
    <mytag2>2</mytag2>
    <mytag3>3</mytag3>
    <mytag4>4</mytag4>
  </item>
  <item>
    <mytag1>5</mytag1>
    <mytag2>6</mytag2>
    <mytag3>7</mytag3>
    <mytag4>8</mytag4>
  </item>
</sample>
```


After converting it with the plugin, I get the following JSON:

```json
{
  "sample": {
    "item": [
      { "mytag1": "1", "mytag2": "2", "mytag3": "3", "mytag4": "4" },
      { "mytag1": "5", "mytag2": "6", "mytag3": "7", "mytag4": "8" }
    ]
  }
}
```
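The plugin's exact conversion rules are not stated here, but a common naive rule reproduces the JSON above: leaf elements become strings, and a child tag becomes an array only when it occurs more than once under the same parent. The Python sketch below assumes that rule; `element_to_json` and `convert` are hypothetical names, not the plugin's code.

```python
import json
import xml.etree.ElementTree as ET


def element_to_json(elem):
    """Hypothetical naive rule: leaf elements become strings, and a child tag
    becomes a JSON array only if it occurs more than once under the same
    parent. An assumption about the plugin, not its actual code."""
    children = list(elem)
    if not children:
        return (elem.text or "").strip()
    result = {}
    for child in children:
        value = element_to_json(child)
        if child.tag not in result:
            result[child.tag] = value  # first occurrence: plain value
        elif isinstance(result[child.tag], list):
            result[child.tag].append(value)  # third or later occurrence
        else:
            # second occurrence: promote the existing value to an array
            result[child.tag] = [result[child.tag], value]
    return result


def convert(xml_text):
    """Wrap the root element's converted content under the root tag name."""
    root = ET.fromstring(xml_text.strip())
    return {root.tag: element_to_json(root)}


SOURCE_XML = """
<sample>
  <item><mytag1>1</mytag1><mytag2>2</mytag2><mytag3>3</mytag3><mytag4>4</mytag4></item>
  <item><mytag1>5</mytag1><mytag2>6</mytag2><mytag3>7</mytag3><mytag4>8</mytag4></item>
</sample>
"""

print(json.dumps(convert(SOURCE_XML)))
```

Note that under this rule the array only exists because `item` repeats; the rule has no schema telling it that `item` is meant to be a list.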

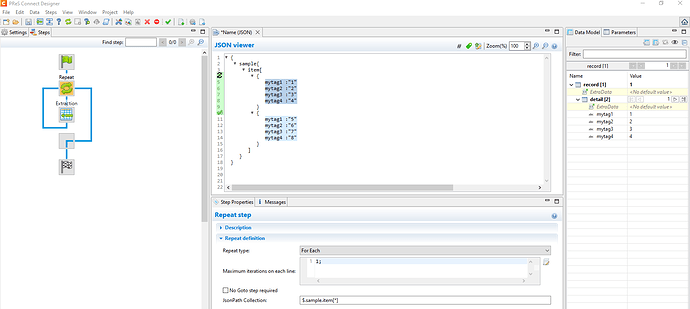

In my data mapper I would like to set a repeat on “sample / item” using the following JSONPath collection: `$.sample.item[*]`

Other ways to write this would be `.sample.item[*]` or `$..item[*]`, which all give the same result.

All of these are valid according to this website:

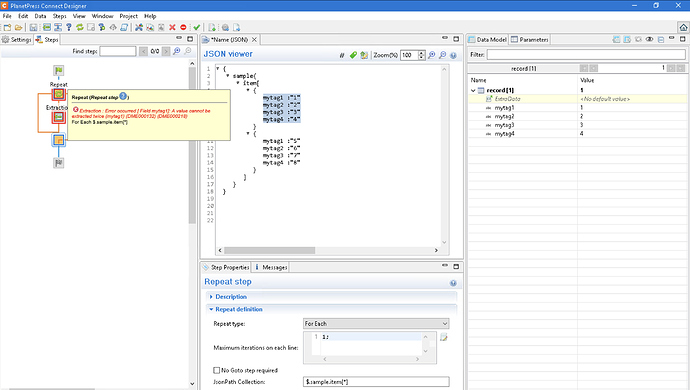
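To see why the child path and the recursive-descent path agree on this document, here is a hand-written Python sketch of what each expression selects. It is not a JSONPath library; `wildcard`, `descendants`, `select_child_path` and `select_recursive` are hypothetical helpers that follow the wildcard and descendant semantics described in RFC 9535, restricted to this example.

```python
import json

DOC = json.loads(
    '{"sample": {"item": ['
    '{"mytag1": "1", "mytag2": "2", "mytag3": "3", "mytag4": "4"}, '
    '{"mytag1": "5", "mytag2": "6", "mytag3": "7", "mytag4": "8"}]}}'
)


def wildcard(node):
    """[*]: all children of a node (array elements, or an object's member values)."""
    if isinstance(node, list):
        return list(node)
    if isinstance(node, dict):
        return list(node.values())
    return []


def descendants(node):
    """Yield the node itself plus every node nested below it, depth first."""
    yield node
    for child in wildcard(node):
        yield from descendants(child)


def select_child_path(doc):
    """$.sample.item[*]"""
    return wildcard(doc["sample"]["item"])


def select_recursive(doc):
    """$..item[*]: apply [*] to every value stored under an 'item' key at any depth."""
    result = []
    for node in descendants(doc):
        if isinstance(node, dict) and "item" in node:
            result.extend(wildcard(node["item"]))
    return result


print(select_child_path(DOC) == select_recursive(DOC))
```

Both selections contain the same two `item` objects, so any difference in the data mapper's behavior is unlikely to come from which of these expressions is used.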

As soon as I add an Extract step inside my repeat, I get the following message:

`Exctraction: Error occured [Field mytag1]: A value cannot be extracted twice (mytag1)`

Why does this cause an error, and how can I solve it?
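One way to read the message is as a write-once rule on record-level fields: if an Extract step inside the repeat writes to the same record field on every iteration, the second item collides with the first. The toy Python model below only illustrates that hypothesis; it is not OL Connect's implementation, and `Record`, `extract`, `add_detail_row` and the "detail table" structure are all hypothetical names. Whether the data mapper expects extractions inside a repeat to target a detail table is an assumption worth checking in its documentation.

```python
class ExtractionError(Exception):
    pass


class Record:
    """Toy record (hypothetical): each top-level field may be written only once,
    mirroring the message 'A value cannot be extracted twice'."""

    def __init__(self):
        self.fields = {}
        self.details = {}  # hypothetical detail tables: name -> list of row dicts

    def extract(self, name, value):
        if name in self.fields:
            raise ExtractionError(f"A value cannot be extracted twice ({name})")
        self.fields[name] = value

    def add_detail_row(self, table, row):
        self.details.setdefault(table, []).append(row)


ITEMS = [
    {"mytag1": "1", "mytag2": "2", "mytag3": "3", "mytag4": "4"},
    {"mytag1": "5", "mytag2": "6", "mytag3": "7", "mytag4": "8"},
]


def extract_into_record(items):
    """Every iteration writes to the same record fields, so iteration 2 collides."""
    record = Record()
    for item in items:
        for name, value in item.items():
            record.extract(name, value)
    return record


def extract_into_detail_table(items):
    """Every iteration gets its own row, so nothing is written twice."""
    record = Record()
    for item in items:
        record.add_detail_row("item", dict(item))
    return record
```

In this model, calling `extract_into_record(ITEMS)` raises on `mytag1` during the second iteration, while `extract_into_detail_table(ITEMS)` produces one row per item.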

My second issue is that the plugin does not know anything about arrays.

For example, if the source contains just one item instead of two, the XML looks like this:

```xml
<sample>
  <item>
    <mytag1>1</mytag1>
    <mytag2>2</mytag2>
    <mytag3>3</mytag3>
    <mytag4>4</mytag4>
  </item>
</sample>
```

Running this through the plugin would give me the following JSON:

```json
{
  "sample": {
    "item": { "mytag1": "1", "mytag2": "2", "mytag3": "3", "mytag4": "4" }
  }
}
```

Suddenly `item` is no longer an array, which makes sense because the plugin has no way of knowing that it should be one. But how do I handle this case within the data mapper?
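A common general-purpose way to cope with this "one or many" shape is to normalize the value to a list before iterating. It also matters for the repeat: in standard JSONPath (RFC 9535), `[*]` applied to an object selects its member values, so on the single-item JSON `$.sample.item[*]` would select the four strings "1" to "4" rather than one item object. The Python below is only a sketch of the normalization idea; `as_list` and `items_of` are hypothetical helpers, and whether the data mapper offers a comparable hook (for example a script step) is an assumption this post does not settle.

```python
def as_list(value):
    """Normalize 'one or many': a list passes through unchanged, a single
    object becomes a one-element list, and a missing value becomes []."""
    if value is None:
        return []
    if isinstance(value, list):
        return value
    return [value]


def items_of(doc):
    """Return sample.item as a list, whether the plugin produced one item or many."""
    sample = doc.get("sample") or {}
    return as_list(sample.get("item"))


ONE_ITEM = {"sample": {"item": {"mytag1": "1", "mytag2": "2", "mytag3": "3", "mytag4": "4"}}}
TWO_ITEMS = {"sample": {"item": [
    {"mytag1": "1", "mytag2": "2", "mytag3": "3", "mytag4": "4"},
    {"mytag1": "5", "mytag2": "6", "mytag3": "7", "mytag4": "8"},
]}}

# The same loop now works for both shapes.
for doc in (ONE_ITEM, TWO_ITEMS):
    for item in items_of(doc):
        print(item["mytag1"])
```

The design choice is to fix the shape once, at the boundary, so the code that iterates over items never has to check whether it received an object or an array.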