Hi,

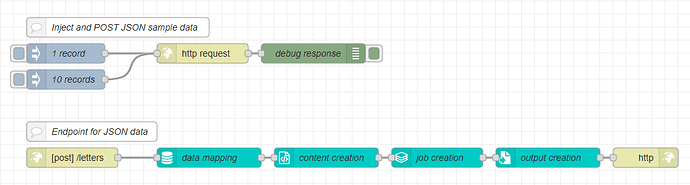

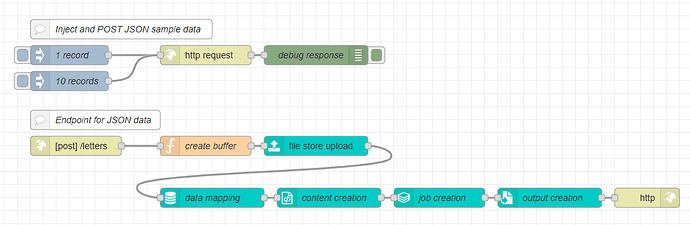

Sorry to spam this forum so much, but I would like to get a simple workflow working: I send a JSON body via an API call to my Automate workflow, it gets processed correctly, and I end up with a set of individual invoices.
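A minimal sketch of such a call in Python, using only the standard library. The endpoint URL and the payload shape are hypothetical placeholders (port 1880 is the Node-RED default and is only assumed here); the real URL depends on how the HTTP endpoint is set up in the Automate flow.

```python
import json
import urllib.request

# Hypothetical endpoint: replace with the URL of the HTTP endpoint in the Automate flow.
AUTOMATE_URL = "http://automate-host:1880/invoices"


def build_request(url: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request with a JSON body."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_invoices(url: str, payload: dict, timeout: float = 30.0) -> int:
    """Send the JSON body to the endpoint and return the HTTP status code."""
    with urllib.request.urlopen(build_request(url, payload), timeout=timeout) as response:
        return response.status
```

Calling `send_invoices(AUTOMATE_URL, {"invoices": [...]})` posts the batch and returns the HTTP status. Building the request separately from sending it makes it easy to inspect the payload before anything goes over the network.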

Here is a little background. In my OL Connect Workflow, the invoices come in separately, one by one. The big advantage of this is that if something goes wrong with one or two of them, I can pull them out of the batch and process them manually. Once the batch of invoices is complete, I merge them into a single XML file (our system still outputs XML), convert it to JSON, and feed it to the all-in-one process as a single file, because this is a lot faster than processing the files one by one. The only downside is that afterwards I need to split the invoices again, so I have an output preset for that and also a job preset for some metadata.
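The merge-then-convert step can be sketched in Python as follows. This is only an illustration, assuming each source file holds one `<invoice>` element with simple child fields; the element names and the `{"invoices": [...]}` JSON shape are hypothetical and will differ from the real data and from whatever the data mapping configuration expects. Attributes are ignored for brevity.

```python
import json
import xml.etree.ElementTree as ET


def element_to_value(elem: ET.Element):
    """Convert an XML element to plain Python data.

    Leaf elements become their stripped text; elements with children become
    dicts, and repeated child tags are collected into lists.
    """
    children = list(elem)
    if not children:
        return (elem.text or "").strip()
    result: dict = {}
    for child in children:
        value = element_to_value(child)
        if child.tag in result:
            if not isinstance(result[child.tag], list):
                result[child.tag] = [result[child.tag]]
            result[child.tag].append(value)
        else:
            result[child.tag] = value
    return result


def merge_invoices(xml_documents: list[str]) -> ET.Element:
    """Merge single-invoice XML documents under one <invoices> root."""
    root = ET.Element("invoices")
    for doc in xml_documents:
        root.append(ET.fromstring(doc))
    return root


def invoices_to_json(xml_documents: list[str]) -> str:
    """Merge the batch and serialize it as one JSON document."""
    merged = merge_invoices(xml_documents)
    # Always emit a list, even when the batch contains a single invoice.
    records = [element_to_value(invoice) for invoice in merged]
    return json.dumps({"invoices": records}, indent=2)
```

Keeping the invoices as a list inside one JSON file is what allows a single all-in-one run to process the whole batch, while each list entry still maps back to one original invoice.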

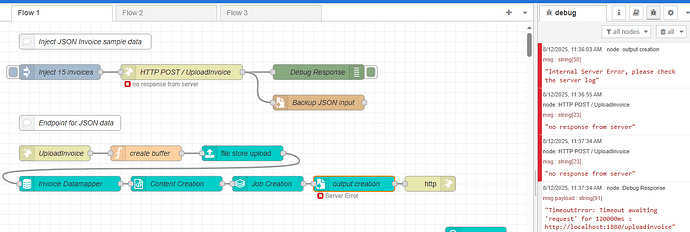

In my OL Connect Workflow this works fine. When I use the all-in-one, however, I run into a lot of issues. When I leave out the presets, I get a single big PDF file inside a zip file, but I want the invoices split. When I add the output preset, I do get the individual invoices, but they are stored on my server and I can't see them locally.

At other times I get a Server Error, but the server log shows no errors: everything is processed and the log ends with a summary for the Job Set.

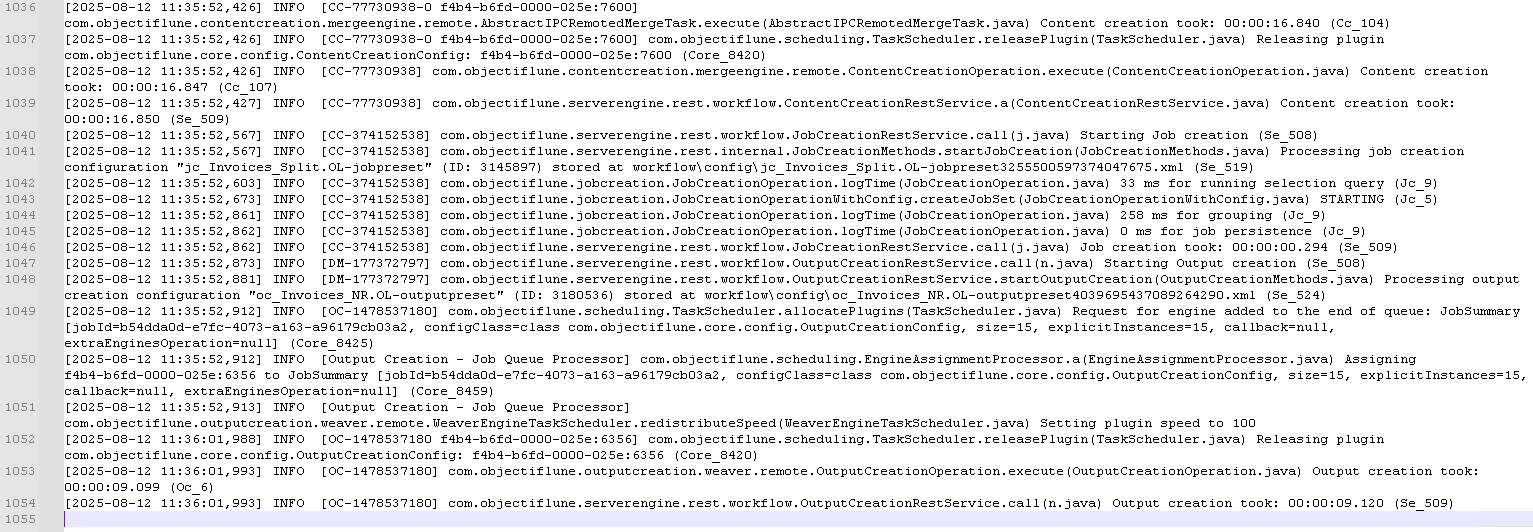

The location I use for the server log is: `C:\Users\<user account>\Connect\logs\Server`.
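Checking a log folder by hand is easy to get wrong when there are many files, so a small scan can help confirm that nothing was missed. A minimal Python sketch, assuming the log files use a `.log` extension and that error entries contain the word `ERROR` (both are assumptions about the Connect log format, not confirmed here):

```python
from pathlib import Path


def find_log_lines(log_dir: str, keywords: tuple[str, ...] = ("ERROR",)) -> list[tuple[str, int, str]]:
    """Return (file name, line number, line) for every log line containing a keyword.

    Assumes log files end in .log; other files in the folder are skipped.
    """
    hits = []
    for log_file in sorted(Path(log_dir).glob("*.log")):
        with log_file.open(encoding="utf-8", errors="replace") as fh:
            for number, line in enumerate(fh, start=1):
                if any(keyword in line for keyword in keywords):
                    hits.append((log_file.name, number, line.rstrip("\n")))
    return hits
```

Usage would be something like `find_log_lines(r"C:\Users\<user account>\Connect\logs\Server")`, with the placeholder replaced by the actual account name. An empty result supports the observation that the server itself logged no errors.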

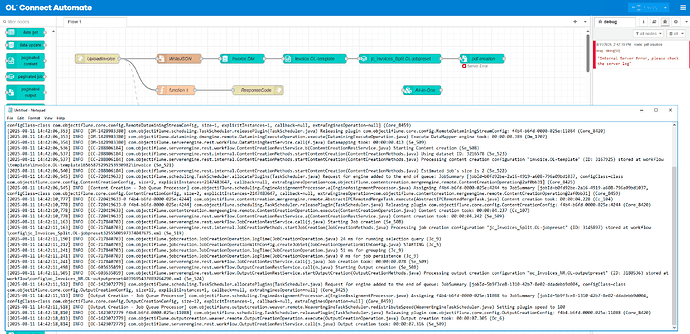

Here is a screenshot with as much detail as I could fit:

It looks a little different from the all-in-one, but overall I do not see any errors.

To me, Automate looks really cool. It is a great tool to convert my workflows to, and using APIs and creating endpoints is so much easier than in OL Connect Workflow. Even so, the OL Connect nodes unfortunately have a pretty steep learning curve.

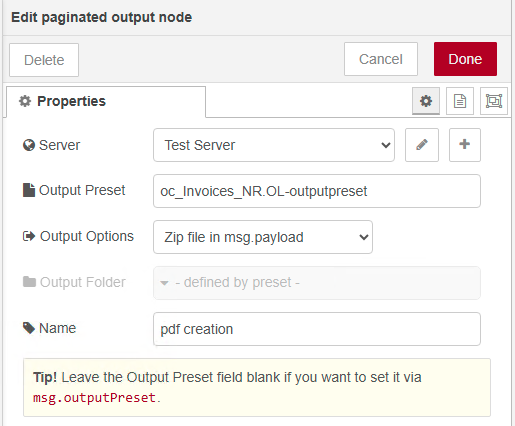

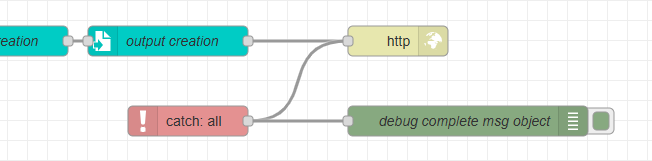

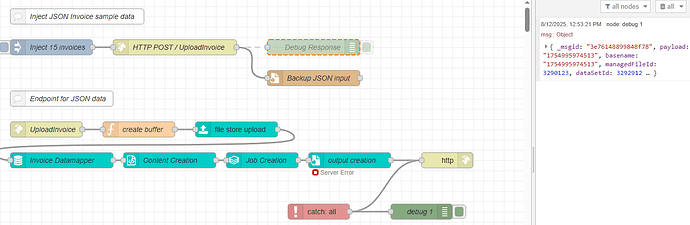

And here is a screenshot of the paginated output node (the Output Preset is a dropdown now, so that part works):

I sometimes get a server error when I attach something after this node, so I will take it one node at a time and add anything extra later, once everything is working. So far, all the nodes work except for the PDF creation node.

When I set the Output Options to “Managed by Output Preset”, it works fine without any errors, except that the zip file ends up on the server (which is not the machine where Automate is running). How do I then get my invoices?
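Once the zip file is reachable from the Automate machine (for example, if the output preset writes to a folder that is shared on the network; this is an assumption, not something the current setup is known to do), unpacking the individual invoice PDFs locally is straightforward. A minimal Python sketch; the function name and paths are hypothetical:

```python
import zipfile
from pathlib import Path


def extract_invoices(zip_path: str, target_dir: str) -> list[str]:
    """Extract the PDF entries from the output zip into target_dir and return their file names."""
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    extracted = []
    with zipfile.ZipFile(zip_path) as archive:
        for name in archive.namelist():
            if name.lower().endswith(".pdf"):
                # Keep only the file name: this flattens folders inside the zip
                # and prevents writing outside target_dir.
                file_name = Path(name).name
                (target / file_name).write_bytes(archive.read(name))
                extracted.append(file_name)
    return extracted
```

This only covers the last step; how the zip gets from the Connect server to the Automate machine is still the open question above.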