I can tell for a fact that the JSON data you attached is encoded using UTF-8. It means that non-ASCII characters are encoded using between 2 and 4 bytes, usually 2 for Spanish (which it appears to be).

Sadly, all the rest is subject to interpretation. There are a lot of implicit or missing information in your question, all of which are important to understand the context of the problem because, for encoding issues, every single detail counts.

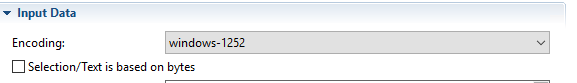

For example, it is not clear where and how the data is being processed. It appears that you have selected to use a Text File format. Are you trying to process the attached JSON with the Data Mapper? If so, why not the native JSON support? If using a text file, selecting windows-1252 means that the byte stream will be interpreted incorrectly; UTF-8 must be selected as the encoding for the JSON data.

But the JSON data actually appears to be the result of a data mapping operation. I am not sure where the third screenshot is coming from, but the JSON is being loaded in Workflow as per the second one. So I am guessing that the JSON data comes from the Data Mapping operation, possibly using the JSON option of the Output Type from the Execute Data Mapping task.

Here is what my psychic debugging powers tell me…

- A text file encoded using windows-1252 is processed by the Data Mapper. This part works correctly.

- The data is retrieved from Connect Server in JSON format as the output from the Execute Data Mapping task. At this point, the job file in Workflow is the attached JSON data file.

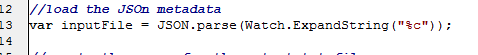

- In a subsequent Run Script task, the JSON file from step 2 is loaded into the script using the method in your screenshot, which is to call

JSON.parse() on the content of the job file retrieved via the %c variable.

- Within the same Run Script task as step 3, the property

fields.Field1 is accessed. This is where the “error with the encoding conversion” occurs, as, for example, Línea is retrieved as LÃnea.

If this is correct, everything is running as intended. The problem is that loading the JSON file through %c tells the script runtime to treat every byte in the string as a character. In other words, multi-byte character are not decoded but kept as-is.

The appropriate way to load a JSON fiel from disk is to treat it as a file encoded in UTF-8, which it is. This can be done using this code.

function readFromFileUtf8(filename) {

var stream = new ActiveXObject("ADODB.Stream");

try {

stream.Type = 2;

stream.CharSet = "utf-8";

stream.Open();

stream.loadFromFile(filename);

var s = stream.ReadText();

} finally {

stream.Close();

}

return s;

}

var jsonText = readFromFileUtf8(Watch.ExpandString("%F"));

var jsonObject = JSON.parse(jsonText);

Watch.Log(jsonObject[0].fields.Field1, 3);

Using the attached data file, this will yield the following result in the Messages area.

[0002] | Línea | Artículo |Unidad | Cantidad |Precio unitario |Importe sin IVA| Divisa |

Alternatively, you may use the Translator plugin in Workflow to convert your JSON data to a code page, which can then be used directly in Workflow, including the Data Selector. Note that this will work only if the data in the JSON file fits within the selected code page, otherwise data loss will occur.